For many years, hades has been running as my fileserver with an LVM array of disks.

LVM (Logical Volume Manager) is a Linux feature that lets you combine more than one disk into a single volume, and, the way I had it set up, it offers no protection against a disk failure.

And disks did fail, many times. Luckily, simply cloning the old disk to a newer, bigger one solved the problem every time.

And the LVM grew, and its filesystem grew, and now it's time to upgrade it.

The LVM contained the following disks:

Two Seagate ST31500541AS (1500 GB each), one Seagate ST3750640AS (750 GB), one Samsung HD501LJ (500 GB) and one Samsung HD401LJ (400 GB), making a total of 4650 GB of storage (minus partitioning, LVM and XFS overhead).

It was time to migrate to something safer, so I bought the following:

Five hard disks, five SATA-to-USB enclosures and a more powerful 800 W power supply unit. Not that hades' 600 W PSU had ever failed, but I prefer to have more than enough power: when I first assembled hades it suffered a lot of power outages, because a poor-quality PSU could not deliver its promised power and the hard disks kept stopping randomly. That was the day hades got its nickname, "the troublemaker".

It is also time to install a new SCSI card: the spare Adaptec AHA-2940U2.

This controller replaces the previous Adaptec AHA-29160N SCSI card in driving the IBM Ultrium LTO2 tape drive used to back up everything at Claunia.com. The old controller used to hang at power-on and during transfers, and finally died completely (again, "the troublemaker").

The USB enclosures are there to hold the old LVM hard disks during the migration; afterwards I'll have spare external drives to use from time to time (friends keep asking me to give them away; forget about it).

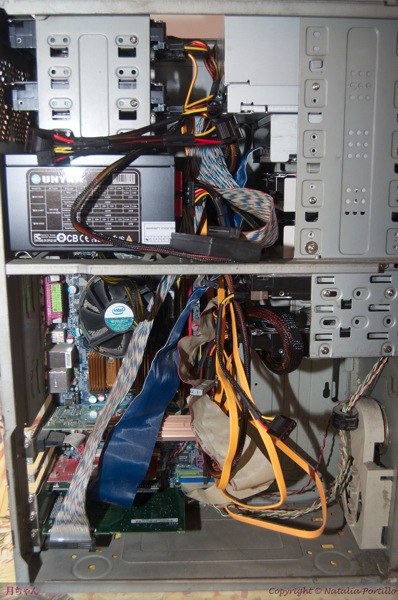

With everything in place and connected, hades is a mess of cables. The orange ones are the SATA cables to the new disks, the gray one goes to the system disk, the blue one to the DVD-ROM drive, and the multicolored one is the SCSI cable, connected to the tape drive and the terminator.

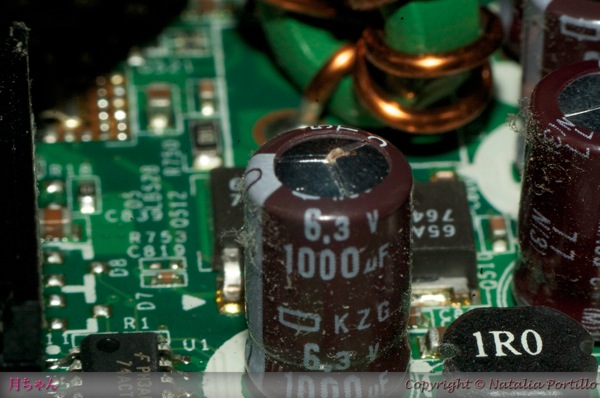

With everything assembled it was time to power up, and then I heard "beep beep beep". Great! At first I thought the graphics card was loose, but a visual inspection showed the following:

At first it's difficult to see, but on close inspection:

A leaking capacitor. Once again the troublemaker lives up to its name.

It had been running for months in this condition, and it chose now to fail. So I had to buy a new graphics card.

A super-uber ATi Radeon HD 3450, the absolute maximum in performance. Yes, I'm kidding: this is a fileserver and I don't care about the performance of the graphics card. I just didn't have a working one at hand and this was the cheapest available. It's also the first time I've installed an ATi graphics card in a machine; I always use nVidia ones.

But hades still would not boot. This time a RAM stick was malfunctioning, so I pulled it out, halving hades' RAM to just 1 GB. The troublemaker never rests! However, by then everything was closed for Easter, so I'll have to wait until Monday to get more RAM.

Finally it booted, so I connected four of the LVM disks through the USB enclosures and one to the only free SATA port on hades' motherboard, and the fun began.

Linux booted correctly, except for KDE, which was expected, as it was configured for an nVidia card, not an ATi one.

The drive order was a complete mess. The system disk moved from sdf to sdk, and the rest of the disks were enumerated in a jumbled order (SATA, SATA, USB, SATA, USB, and so on), but the old LVM volume mounted without a problem.

On each new disk I created a GUID Partition Table (GPT) containing a single "Linux RAID" partition, and then built a RAID5 array using each freshly created partition as a member.
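The partitioning tool isn't named here. Assuming `sgdisk` (from the gdisk package), a dry-run sketch of that step could look like this; the helper name `gpt_raid_cmds` is hypothetical, and it only prints the commands so nothing is written to any disk:

```shell
# Hypothetical dry-run helper: prints, but does not run, one sgdisk command
# per disk. --clear wipes existing partition data and starts a new empty GPT,
# --new=1:0:0 makes partition 1 span all available space, and
# --typecode=1:FD00 marks it with gdisk's "Linux RAID" type code.
gpt_raid_cmds() {
    for disk in "$@"; do
        echo "sgdisk --clear --new=1:0:0 --typecode=1:FD00 $disk"
    done
}

# Device names as in the mdadm command used for this array; check yours
# with lsblk first, since the drive order here was anything but stable.
gpt_raid_cmds /dev/sda /dev/sdb /dev/sdc /dev/sdh /dev/sdi
```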

RAID5 joins three or more disks, striping the data across all of them and computing recovery (parity) data that is also distributed across the disks, so the array survives the loss of any single disk. The total parity data equals the size of one full disk, so in my case five Seagate 2 TB disks give a RAID5 with 8 TB of usable space (and 2 TB used for parity).
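To make the capacity rule and the "recovery data" idea concrete, here is a small sketch with hypothetical helpers `raid5_usable` and `raid5_parity`. Usable space is (disks - 1) × disk size, and a stripe's parity is the XOR of its data blocks, which is what lets a missing block be rebuilt. This is a simplification: real md RAID5 rotates the parity block across the disks and operates on whole chunks, not small integers.

```shell
# Usable RAID5 capacity: one disk's worth of space holds parity,
# so usable = (number_of_disks - 1) * disk_size.
raid5_usable() {
    disks=$1
    size=$2
    if [ "$disks" -lt 3 ]; then
        echo "RAID5 needs at least 3 disks" >&2
        return 1
    fi
    echo $(( (disks - 1) * size ))
}

# Parity of one stripe is the XOR of its data blocks
# (toy "blocks" here are small integers, one per data disk).
raid5_parity() {
    p=0
    for block in "$@"; do
        p=$(( p ^ block ))
    done
    echo "$p"
}

raid5_usable 5 2                   # prints 8 (TB, with 2 TB disks)
parity=$(raid5_parity 11 22 33 44)
# If the disk holding 22 dies, XOR of the survivors and the parity restores it.
raid5_parity 11 33 44 "$parity"    # prints 22
```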

It's quite easy to create; I just ran:

```shell
# Build a 5-disk RAID5 array as /dev/md0 from the new "Linux RAID" partitions
mdadm --create --verbose /dev/md0 --level=5 --raid-devices=5 \
    /dev/sda1 /dev/sdb1 /dev/sdc1 /dev/sdh1 /dev/sdi1
```

and in less than a minute the RAID5 was created.

As soon as the RAID5 was created, the array started computing the whole 2 TB of parity data in the background, slowing down every other operation: creating the XFS filesystem alone took 5 minutes. I don't want to imagine how long creating an ext4 filesystem would take, as in my experience it is more than 50 times slower than creating an XFS one.
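The Linux md driver reports the progress of that initial parity build in `/proc/mdstat`. A hedged sketch of a helper to pull out the percentage (the name `mdstat_progress` is hypothetical); the sample line fed to it below is made up for illustration, not captured from hades:

```shell
# Print the first percentage found on a "recovery =" or "resync =" line
# of /proc/mdstat-style text read from standard input.
mdstat_progress() {
    awk '/(recovery|resync) *=/ {
        for (i = 1; i <= NF; i++)
            if ($i ~ /%$/) { print $i; exit }
    }'
}

# On the real machine:  mdstat_progress < /proc/mdstat
printf '%s\n' '      [>....................]  recovery =  2.3% (45088836/1953382400) finish=310.9min speed=102153K/sec' |
    mdstat_progress    # prints 2.3%
```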

I did a test copy of 37 GB of data from the LVM to the RAID5, and it took 28 minutes. Simple math says that's about 1.3 GB/min, and as I have to copy 3900 GB, that makes about 50 hours: yes, more than two days.
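The same estimate as a small sketch, using a hypothetical helper `copy_eta_hours` and awk for the division, since POSIX shell arithmetic is integer-only:

```shell
# Estimate the total copy time from a timed sample copy.
# Arguments: sample size (GB), sample time (minutes), total to copy (GB).
copy_eta_hours() {
    awk -v s="$1" -v m="$2" -v t="$3" 'BEGIN {
        rate = s / m                      # GB per minute
        printf "%.2f GB/min, %.1f hours\n", rate, t / rate / 60
    }'
}

copy_eta_hours 37 28 3900    # prints 1.32 GB/min, 49.2 hours
```

Rounding the rate down to 1.3 GB/min gives exactly 3000 minutes, which is where the 50-hour figure comes from.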

I don't think these USB enclosures, or their power supplies, have ever been tested for 50 hours of continuous operation, but we'll see how it goes.

I pray that the troublemaker gives me 50 hours of peace and no problems :p